science

Entertained by scandalous deceiving melancholy, hurrah!

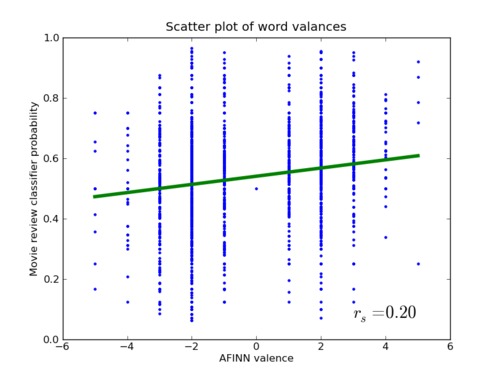

In my effort to beat the SentiStrength text sentiment analysis algorithm by Mike Thelwall, I came up with what I thought was a low-hanging-fruit killer approach. Using the standard movie review data set of Bo Pang available in NLTK (used in research papers as a benchmark data set), I would train an NLTK classifier, compare it with my valence-labeled word list AFINN, and readjust the weights of the words in AFINN a little.

What I found, however, was that for a great number of words the sentiment valence in my AFINN word list and the classifier probability trained on the movie reviews were in disagreement. A word such as ‘distrustful’ I have as a quite negative word. However, the classifier reports the probability for ‘positive’ to be 0.87, i.e., quite positive. I examined where the word ‘distrustful’ occurred in the movie review data set:

$ egrep -ir "\bdistrustful\b" ~/nltk_data/corpora/movie_reviews/

The word ‘distrustful’ appears 3 times, in all cases in a ‘positive’ movie review. The word is used to describe elements of the narrative or an outside reference rather than the quality of the movie itself. Another word that I have as negative is ‘criticized’. It is used 10 times in the positive movie reviews (and never in the negative ones). I find one negation (‘the casting cannot be criticized’), but mostly the word appears in contexts where the reviewer criticizes the critique of others, e.g., ‘many people have criticized fincher’s filming […], but i enjoy and relish in the portrayal’.

The top 15 ‘misaligned’ words using my ad hoc metric are listed here:

| Diff. | Word | AFINN score | Classifier P(positive) |
|---|---|---|---|
| 0.75 | hurrah | 5 | 0.25 |
| 0.75 | motherfucker | -5 | 0.75 |
| 0.75 | cock | -5 | 0.75 |
| 0.68 | lol | 3 | 0.12 |
| 0.67 | distrustful | -3 | 0.87 |
| 0.67 | anger | -3 | 0.87 |
| 0.66 | melancholy | -2 | 0.96 |
| 0.65 | criticized | -2 | 0.95 |
| 0.65 | bastard | -5 | 0.65 |
| 0.65 | downside | -2 | 0.95 |
| 0.65 | frauds | -4 | 0.75 |
| 0.65 | catastrophic | -4 | 0.75 |
| 0.64 | biased | -2 | 0.94 |
| 0.63 | amusements | 3 | 0.17 |
| 0.63 | worsened | -3 | 0.83 |
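The “Diff.” column is not defined in the text. A minimal sketch, assuming it is the absolute difference between the AFINN score rescaled from [-5, 5] to [0, 1] and the classifier’s probability for ‘positive’; this assumption reproduces every row of the table above, but the function name `misalignment` is hypothetical.

```python
def misalignment(afinn_score: int, p_positive: float) -> float:
    """Hypothetical ad hoc metric: |rescaled AFINN valence - P(positive)|.

    AFINN scores run from -5 to +5; (score + 5) / 10 maps them onto [0, 1]
    so they can be compared with the classifier's probability for 'positive'.
    """
    return abs((afinn_score + 5) / 10 - p_positive)


# A few rows from the table: (word, AFINN score, classifier P(positive))
rows = [("hurrah", 5, 0.25), ("lol", 3, 0.12), ("distrustful", -3, 0.87),
        ("melancholy", -2, 0.96), ("amusements", 3, 0.17)]

# Sort by the metric, most misaligned first
for word, score, prob in sorted(rows, key=lambda r: misalignment(r[1], r[2]), reverse=True):
    print("%.2f %s" % (misalignment(score, prob), word))
```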

It seems that reviewers are interested in movies that have a certain amount of ‘melancholy’, ‘anger’, distrustfulness and (further down the list) scandal, apathy, hoax, struggle, hopelessness and hindrance, whereas smile, amusement, peacefulness and gratefulness are associated with negative reviews. So are movie reviewers unempathetic schadenfreudians entertained by the characters’ misfortune? Hmmm…? It reminds me of journalism, where they say “a good story is a bad story”.

So much for philosophy, back to reality:

The words (such as ‘hurrah’) that have a classifier probability of 0.25 or 0.75 typically each occur only once in the corpus. In this application of the classifier I should perhaps have used a stronger prior, so that ‘hurrah’ with 0.25 would end up around the middle of the scale, with 0.5 as the probability. I haven’t checked whether it is possible to readjust the prior in the NLTK naïve Bayes classifier.
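Why do single occurrences land on exactly 0.25 or 0.75? A minimal sketch, assuming each word probability is estimated with an add-gamma (Lidstone) estimate over two values (word present or absent), gamma = 0.5 being the expected likelihood estimate, and that the two classes are equally large with equal priors. Under these assumptions a word seen once in a positive review and never in a negative one has a likelihood ratio of 1.5 to 0.5, i.e., a posterior of 0.75, and a larger gamma behaves like the stronger prior mentioned above. The function `p_positive` is hypothetical and not NLTK code. If I read the NLTK source correctly, `NaiveBayesClassifier.train` accepts an `estimator` argument (defaulting to `ELEProbDist`) where such smoothing could be changed, but I have not verified this against the version used here.

```python
def p_positive(k_pos: int, k_neg: int, n_per_class: int = 1000, gamma: float = 0.5) -> float:
    """Posterior P(positive | word present) for a one-word document.

    k_pos, k_neg: number of positive/negative reviews containing the word.
    n_per_class: reviews per class (assumed equal, so the class prior is 0.5).
    gamma: Lidstone smoothing constant; 0.5 is the expected likelihood estimate.
    Two bins are assumed: the word is either present or absent in a review.
    """
    bins = 2
    like_pos = (k_pos + gamma) / (n_per_class + gamma * bins)
    like_neg = (k_neg + gamma) / (n_per_class + gamma * bins)
    # Equal class priors cancel, leaving the normalized likelihoods
    return like_pos / (like_pos + like_neg)


print(p_positive(1, 0))           # seen once, in a positive review
print(p_positive(0, 1))           # seen once, in a negative review (like 'hurrah')
print(p_positive(1, 0, gamma=5))  # stronger smoothing pulls it toward 0.5
```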

The conclusion on my Thelwallizer is not good. A straightforward application of the classifier to the movie reviews gets you features that describe the narrative rather than the movie per se, so this simple approach is not particularly helpful for readjusting the weights.

However, there is another way the trained classifier can be used. Examining the most informative features I can ask if they exist in my AFINN list. The first few missing words are: slip, ludicrous, fascination, 3000, hudson, thematic, seamless, hatred, accessible, conveys, addresses, annual, incoherent, stupidity, … I cannot use ‘hudson’ in my word list, but words such as ludicrous, seamless and incoherent are surely missing.
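Checking which of the most informative features are missing from AFINN is a set-membership test over a ranked list. A minimal sketch, assuming the ranked words come from the trained classifier (for instance from the NLTK classifier’s `most_informative_features`) and that the word list is loaded as a dict; the entries and scores below are illustrative toy data, not the real ranking or the real AFINN values.

```python
def missing_from_wordlist(ranked_words, wordlist):
    """Return the ranked words that do not occur in the word list, keeping rank order."""
    seen = set()
    missing = []
    for word in ranked_words:
        # Skip words already in the list as well as repeats in the ranking
        if word not in wordlist and word not in seen:
            missing.append(word)
            seen.add(word)
    return missing


# Toy stand-ins: AFINN-style entries (illustrative scores) and a ranking
afinn = {"stupid": -2, "good": 3, "bad": -3}
ranked = ["bad", "slip", "ludicrous", "good", "seamless", "stupid", "incoherent"]
print(missing_from_wordlist(ranked, afinn))
```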

(28 January 2012: Look out in the code below! The way the features are constructed for the classifier is troublesome. In NLTK you should normally not only specify the words that appear in the text with ‘True’, but also explicitly specify the words that do not appear in the text with ‘False’. Leaving words out of the feature dictionary might be bad depending on the application.)
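The fix hinted at in the note is to give the classifier a value for every vocabulary word: True when it occurs in the review and False when it does not. A minimal sketch, assuming a fixed vocabulary (e.g., the most frequent words in the corpus); the name `document_features` and the toy data are my own choices for illustration.

```python
def document_features(words, vocabulary):
    """Map every vocabulary word to True/False depending on presence in the document."""
    present = set(w.lower() for w in words)
    return {word: (word in present) for word in vocabulary}


vocabulary = ["distrustful", "criticized", "hurrah", "seamless"]
review = "Many people have criticized Fincher's filming".split()
print(document_features(review, vocabulary))
```

Each such dict can be paired with its label, e.g., `(document_features(words, vocabulary), 'pos')`, to form the (featureset, label) training tuples that NLTK’s `NaiveBayesClassifier.train` takes. The dicts grow with the vocabulary, so a moderate vocabulary size keeps memory in check.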

Co-author mining for papers in the Brede Wiki

With the SQLite file generated from the Brede Wiki it is relatively easy to perform some simple co-author mining. First, one needs to download the SQLite file from the Brede Wiki download site, here with the Unix program ‘wget’:

wget http://neuro.imm.dtu.dk/services/bredewiki/download/bredewiki-templates.sqlite3

For a start, let’s find the author listed with the most papers in the Brede Wiki. Start the sqlite3 client program:

sqlite3 bredewiki-templates.sqlite3

After setup (sqlite> .mode column, sqlite> .width 25), finding the most frequently mentioned authors is one line of SQL:

sqlite> SELECT value, COUNT(*) AS c FROM brede WHERE (template='paper' OR template='conference_paper') AND field = 'author' GROUP BY value ORDER BY c DESC LIMIT 10;

| Author | Papers |
|---|---|
| Finn Årup Nielsen | 26 |
| Gitte Moos Knudsen | 23 |
| Lars Kai Hansen | 18 |
| Claus Svarer | 15 |
| Olaf B. Paulson | 15 |
| Vibe Gedsø Frøkjær | 13 |
| Russell A. Poldrack | 11 |
| David Erritzøe | 10 |
| Richard S. J. Frackowiak | 10 |
| William F. C. Baaré | 9 |

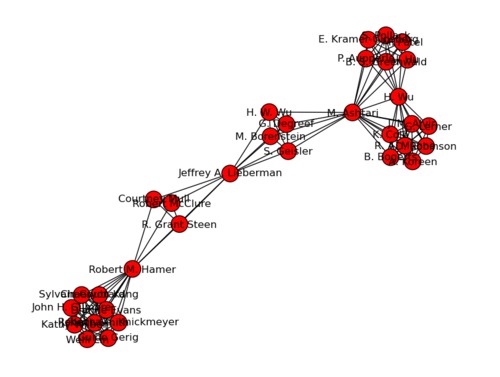

That is, e.g., ‘Russ Poldrack’ is presently listed with 11 papers in the Brede Wiki. To visualize the co-authors, one can query the SQLite database from within Python: first get the pages with the ‘paper’ and ‘conference paper’ templates, then query for the authors on each of these pages, add the co-authors to a NetworkX graph and draw the graph via GraphViz. The image shows the fourth connected component with 33 authors centered around Jeffrey A. Lieberman. The first connected component has 1490 authors. This number is much higher than the number of researchers in the Brede Wiki who each have their own page (520), see the Researcher category.
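The Python side of this can be sketched with the standard-library sqlite3 module. This is not the code in the gist; it assumes a simplified `brede` table with columns `tid` (template instance), `template`, `field` and `value`, and builds a small in-memory toy database so it runs on its own. Instead of NetworkX it keeps the graph as a plain adjacency dict and finds connected components with breadth-first search.

```python
import sqlite3
from collections import defaultdict, deque
from itertools import combinations

# Toy database with an assumed, simplified schema; the real Brede Wiki file
# has more columns (e.g. 'pid') and many more rows.
con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE brede (tid INTEGER, template TEXT, field TEXT, value TEXT)")
rows = [
    (1, "paper", "author", "A"), (1, "paper", "author", "B"),
    (2, "conference_paper", "author", "B"), (2, "conference_paper", "author", "C"),
    (3, "paper", "author", "D"), (3, "paper", "author", "E"),
    (4, "cite journal", "author", "F"),  # a citation, not a paper: ignored
]
con.executemany("INSERT INTO brede VALUES (?, ?, ?, ?)", rows)

# 1. Template instances that are papers or conference papers
tids = [tid for (tid,) in con.execute(
    "SELECT DISTINCT tid FROM brede WHERE template IN ('paper', 'conference_paper')")]

# 2. Link every pair of co-authors on each paper
graph = defaultdict(set)
for tid in tids:
    authors = [a for (a,) in con.execute(
        "SELECT value FROM brede WHERE tid = ? AND field = 'author'", (tid,))]
    for a, b in combinations(authors, 2):
        if a != b:
            graph[a].add(b)
            graph[b].add(a)


# 3. Connected components by breadth-first search, largest first
def connected_components(graph):
    seen, components = set(), []
    for start in graph:
        if start in seen:
            continue
        component, queue = set(), deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            component.add(node)
            for neighbor in graph[node]:
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        components.append(component)
    return sorted(components, key=len, reverse=True)


print(connected_components(graph))
```

Note that single-author papers add no node in this sketch, since nodes only appear through co-authorship edges.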

PageRank computation on the co-author graph is also straightforward once the data is in the NetworkX graph:

import operator

import networkx as nx

for a, p in sorted(nx.pagerank(g).items(), key=operator.itemgetter(1), reverse=True)[:10]: print('%.5f %s' % (p, a))

| PageRank | Author |
|---|---|
| 0.00181 | Gitte Moos Knudsen |
| 0.00176 | Richard S. J. Frackowiak |
| 0.00151 | Russell A. Poldrack |
| 0.00142 | Finn Årup Nielsen |
| 0.00137 | Edward T. Bullmore |
| 0.00134 | Klaus-Peter Lesch |
| 0.00134 | Karl J. Friston |
| 0.00130 | Olaf B. Paulson |
| 0.00128 | Thomas E. Nichols |
| 0.00121 | Peter T. Fox |
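For readers without NetworkX, PageRank on an undirected co-author graph can be sketched as a power iteration. A minimal sketch, assuming the graph is a dict mapping each author to the set of co-authors and that every author has at least one co-author; the damping factor 0.85 matches the default `alpha` of NetworkX’s `pagerank`, while the toy graph is made up for illustration.

```python
def pagerank(graph, damping=0.85, tol=1e-10, max_iter=100):
    """Power-iteration PageRank for an undirected graph {node: set(neighbors)}.

    Each node spreads its rank evenly over its neighbors; with probability
    1 - damping the walk jumps to a uniformly random node.
    Assumes no isolated nodes, so the total rank stays 1.
    """
    nodes = list(graph)
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(max_iter):
        new = {v: (1.0 - damping) / n for v in nodes}
        for v in nodes:
            share = damping * rank[v] / len(graph[v])
            for w in graph[v]:
                new[w] += share
        delta = sum(abs(new[v] - rank[v]) for v in nodes)
        rank = new
        if delta < tol:
            break
    return rank


# Toy co-author graph: 'Hub' has written with everyone else
toy = {"Hub": {"A", "B", "C"}, "A": {"Hub", "B"}, "B": {"Hub", "A"}, "C": {"Hub"}}
for author, score in sorted(pagerank(toy).items(), key=lambda item: item[1], reverse=True):
    print("%.5f %s" % (score, author))
```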

https://gist.github.com/1373887

(Note 2011-11-18: There is an error: ‘pid’ should have been ‘tid’, i.e., “SELECT DISTINCT tid FROM…”. Using ‘pid’ instead of ‘tid’ finds all authors on a wiki page, thus also counting those cited within the ‘cite journal’ template.)

(2012-01-16: Language correction)

Poul Thorsen: a new Milena Penkowa?

In Denmark we have the hilarious case of the neuroscientist Milena Penkowa from the University of Copenhagen, which involves embezzlement, forgery, the head of the university, allegations of scientific misconduct, personal ties to the former Minister of Science and to an employee of the Ministry of Science, inappropriate use of research funds, the change of a Danish law because of anonymous questions, a documentary movie, personal ties to the documentary movie maker, and so on. I have a previous blog post on the case from February 2011.

Now we have a new case: that of Poul Thorsen. Like Penkowa, he is/was an industrious researcher, and he headed a large research group, North Atlantic Neuro-epidemiology Alliances (NANEA), at the University of Aarhus. I am trying to make heads or tails of this story and have begun the Danish version of the Wikipedia article on Poul Thorsen. If you compare it with the corresponding article on Milena Penkowa, you will see that it is much smaller.

- Poul Thorsen obtained large grants from the US Centers for Disease Control for NANEA: almost 8 million US dollars in 2000 and a renewal of over 8 million in 2007. These are very large sums. Apparently, he is suspected of falsifying documents from the US Centers for Disease Control, so that the University of Aarhus paid him 2 million US dollars (believing that money to cover it would come). The university discovered this in the spring of 2009. See Information.

- It is unclear who had the responsibility for administering the money. According to Ulla Danielsen, the Danish Agency for Science, Technology and Innovation was the administrator until the University of Aarhus took over the administration in November 2009. If the university had paid Poul Thorsen before that date, it seems that the agency did not do its job well.

- At his center in Aarhus, Thorsen apparently employed a person who received a special salary subsidy from the state. However, Poul Thorsen added some extra money on top, and that is not legal, according to Information.

- NANEA is under the University of Aarhus, and Thorsen was employed there. However, he has also been employed at Emory University, and this double employment was not approved by the University of Aarhus. See Information.

- In the Danish media much less has been written about Poul Thorsen than about Milena Penkowa. Penkowa has been keen on interviews both before and after her fall from the throne, so the press has lots of interesting quotes, photos and videos of her, see one here. For Thorsen there seem to be very few images (one?). I guess the difference in good picture material might be the reason for the discrepancy in press coverage. The case of Thorsen also has little juicy sex. In the case of Penkowa there were speculations around the Minister of Science and the head of the university. Though these speculations are very likely unfounded, they nevertheless fueled the story that ran almost every day in February and part of March.

- Poul Thorsen has done research on autism and vaccines, see Thimerosal and the occurrence of autism: negative ecological evidence from Danish population-based data. Since Andrew Wakefield this area has been a minefield, where some contend that (mercury-containing) vaccination is harmful and causes autism. There are groups that are anxious about the issue. Age of Autism seems to be one. One blogger refers to it as “the anti-vaccine propaganda blog of Generation Rescue”. Age of Autism has not failed to write critical articles about Poul Thorsen. Even one of the Kennedys has written critically about the issue in the Huffington Post.

- I think the financial issues around Poul Thorsen call his scientific integrity into question. I haven’t heard much about this aspect.

- Poul Thorsen has fairly few first-author articles from recent years. The first author is usually the one with hands-on access to the data, and thus the one with the opportunity to falsify it. Thorsen is not first author on the Thimerosal/autism article. His colleague, the medical doctor Kresten Meldgaard Madsen, says that Thorsen could not change or compromise the data.

- According to Ulla Danielsen, Poul Thorsen is charged with tax evasion, and the case is planned to begin 13 April 2011 (which, as I write this, is today). There is a list of court cases in Aarhus. Among them is a case beginning at 9:30 with the lawyer Jan Schneider. This might be the one. As far as I understand, the courtroom was packed when Penkowa went to trial. I am not sure Poul Thorsen will attract as many, but we might hear more in the afternoon if any journalist attends.

WikiSym 2011 deadline approaching

Apparently the WikiSym 2011 (International Symposium on Wikis and Open Collaboration) research paper deadline is approaching: It is April 1st 2011 (Now how did that date come so quickly?). This year the symposium will be held at Microsoft Research Silicon Valley in Mountain View, California, which might enable you to catch a glimpse of Leslie Lamport.

I have previously been to two WikiSym meetings: one in Odense, Denmark in 2006, the other last year when WikiSym was co-located with Wikimania (the Wikipedia meeting) in Gdansk. Both WikiSym meetings featured so-called Open Spaces, a type of formal informal meeting (to state it clearly) or “structured coffee break”, where issues pertaining to the topic of the meeting can be discussed on the fly. It creates a much more interactive environment than the usual unidirectional scientific meeting where one person lectures in front of an ocean of silent listeners. After the first WikiSym I found “ordinary” scientific meetings somewhat alienating. At the Human Brain Mapping conference Tom Nichols has sometimes (outside the formal schedule) organized poster walk-arounds with many-to-many interaction, which helps, but WikiSym still creates a forum with quite good interaction. I was surprised to find that Wikimania does not schedule Open Spaces. WikiSym also manages to attract a diverse set of researchers. It is not just engineers sitting with their heads in PHP and R code. You will find business school researchers and, e.g., researchers examining how wikis can be used in teaching.

AFINN: A new word list for sentiment analysis on Twitter

In the Responsible Business in the Blogosphere project I have, by the sweat of my own brow, created a sentiment lexicon with 2477 English words (including a few phrases), each labeled with a sentiment strength and targeted towards sentiment analysis of short texts such as those found in social media. It has been constructed with the help of word lists maintained by Steve DeRose (Steven J. DeRose) and Greg Siegle.

We have used my word list for sentiment analysis on Twitter in a few studies, the most notable so far being Good Friends, Bad News – Affect and Virality in Twitter. However, we have not been quite sure how well it performs compared to other sentiment lexicons such as ANEW. I have included a number of words frequently used on the Internet that I have not found in ANEW: obscene words and Internet slang acronyms such as LOL (laughing out loud). So do these extra words make my word list better? ANEW is constructed by multiple persons rating each word and should be much better validated than my list. So maybe ANEW is better?

In a simple comparison between ANEW and my list I looked at the correlation between the sentiment strengths (valences) the two lists assign to the same words. I have previously written about that issue. Such an analysis doesn’t really answer how good the lists are for sentiment analysis.

A few weeks ago Sune Lehmann mentioned that in their study they got tweets labeled for sentiment strength by the Amazon Mechanical Turk (AMT). Their study was the Twittermood study (or “Pulse of the Nation” study) that was much mentioned in the media, e.g., The New Scientist and Scientific American. See also their YouTube video.

Alan Mislove had obtained 1,000 AMT-labeled tweets, each labeled by 10 AMT workers on a scale from 1 to 9. Through Sune I got hold of the Mislove data.

With the Mislove data I have now made a more careful study of the performance of the different word lists and this study is now written up in the position paper A new ANEW: Evaluation of a word list for sentiment analysis in microblogs. The version on our departmental homepage has the code listing.

When I measured the performance of my application of the word lists with a correlation coefficient (between the AMT “ground truth” and my predictions for the sentiment of each tweet), I found that my list and ANEW were well ahead of the word lists in General Inquirer and OpinionFinder. To be fair to the two latter word lists, I should say that I did not utilize all their information for each word, only the polarity. My list was slightly ahead of ANEW. Whether this is statistically significant I don’t know, as I didn’t get around to performing a statistical test.
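The evaluation can be sketched in a few lines: score each tweet as the sum of the word-list valences of its words, then correlate the scores with the mean AMT ratings. A minimal sketch, assuming simple lowercase word tokenization and the Pearson correlation coefficient; the paper’s tokenization may differ, and the word scores, tweets and ratings below are toy data, not the Mislove set.

```python
import math
import re


def score_text(text, wordlist):
    """Sum the valences of all words found in the word list (unknown words count 0)."""
    words = re.findall(r"[a-z']+", text.lower())
    return sum(wordlist.get(w, 0) for w in words)


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


wordlist = {"good": 3, "great": 3, "bad": -3, "awful": -3, "lol": 3}  # toy scores
tweets = ["what a great day lol", "awful awful traffic", "good coffee", "bad news"]
ratings = [8.1, 2.0, 6.5, 3.0]  # toy mean ratings on the 1-9 scale
predictions = [score_text(t, wordlist) for t in tweets]
print(predictions, round(pearson(predictions, ratings), 3))
```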

I also tried the SentiStrength Web service sentiment analyzer on the 1,000 Mislove tweets. This is not just a simple word list but a program that has, e.g., handling of emoticons, negations and spelling. This Web service turned out to be the best, slightly ahead of my list and ANEW.

I have now distributed my 2477-word list from our department server (the zip file link). During the evaluation I found a few embarrassing mistakes in my previous list: I thought it had 1480 words, but it turned out that only 1468 were unique! Words were also sometimes out of alphabetical order. The new list should have no such problems.
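Duplicates and ordering problems like these are easy to catch automatically. A minimal sketch, assuming the distributed file has one entry per line with the word (or phrase) and its integer score separated by a tab; the sample lines are illustrative, not copied from the list. Plain Python string comparison orders by code point, which may differ from a locale-aware alphabetical order.

```python
def parse_wordlist(lines):
    """Parse 'word<TAB>score' lines into (word, score) pairs, skipping blank lines."""
    entries = []
    for line in lines:
        line = line.rstrip("\n")
        if not line.strip():
            continue
        # rsplit keeps multi-word phrases intact; the score is after the last tab
        word, score = line.rsplit("\t", 1)
        entries.append((word, int(score)))
    return entries


def check_wordlist(entries):
    """Return (duplicated words, words that break alphabetical order)."""
    words = [w for w, _ in entries]
    seen, duplicates = set(), []
    for w in words:
        if w in seen:
            duplicates.append(w)
        seen.add(w)
    out_of_order = [b for a, b in zip(words, words[1:]) if b < a]
    return duplicates, out_of_order


sample = ["abandon\t-2\n", "zealous\t2\n", "abandon\t-2\n", "ability\t2\n"]
# In real use: with open(path, encoding="utf-8") as f: entries = parse_wordlist(f)
entries = parse_wordlist(sample)
print(len(entries), len(dict(entries)), check_wordlist(entries))
```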

(Typo correction: 2011-03-17)

(Update 2015-08-25: If you are a Python programmer you might want to take a look at my afinn Python package available here: https://github.com/fnielsen/afinn)

Metallica fan Milena Penkowa rocks Danish University

Accusations of fraud (both real fraud and researcher fraud) target high-profile Danish glossy neuroscientist Milena Penkowa from the University of Copenhagen. It has been front-page news in Denmark for some time now. If you want an English introduction to the case as it stood at the beginning of January 2011, read the Nature News article. Since then the case has grown. Now the central damaging allegation is that she falsified documents stating that a Spanish company was involved in an experiment with many hundreds of rats.

I have tried to aggregate the different sources on the Danish Wikipedia page about Milena Penkowa. I have not yet managed to assemble all the material. During the writing I stumbled on some “loose ends” and subjective thoughts about the case (I must warn you though. I have a conflict of interest: I am from the Technical University of Denmark – a competing university in Copenhagen. I also know some of the professors that just before yule sent a letter with a request for an investigation):

- An element of the Milena Penkowa case cannot be discussed in public because of “legalities”. Journalists and researchers generally know the details of that element but are prohibited from mentioning them in public. Information seekers who want to find out about it may need to ask a person in the know or do a bit of triangulation with an Internet search engine. Hmmm… Isn’t the Danish variant of free speech, ytringsfrihed, having a problem here?

- Some have questioned her overall scientific contribution. If you search PubMed for Penkowa, you’ll see she has first-authored 33 PubMed papers and the total listing counts 98 PubMed papers. Most of her research seems to revolve around the protein metallothionein. The blog entry from Morten Garly Andersen states that “Penkowa’s first big article, which came in 2000 in the scientific magazine Glia, has now been retracted by her co-author, the Spanish Professor Juan Hidalgo.” That statement seems not to be true: “Strongly compromised inflammatory response to brain injury in interleukin-6-deficient mice” is from 1999 and, with 114 citations, is her most cited article on Google Scholar. As far as I know this has not been retracted. As far as I can determine, her Google Scholar h-index is 32. That is quite high for her young age. Quite impressive, I would say. On the other hand, among her first-authored papers I find no journal I can recognize as a high-impact journal. From a medical researcher one would have expected at least one article in, e.g., The Journal of Neuroscience. So we may not be talking about ground-breaking science. She has two patents, but I am not presently aware of any application of these patents. Correction 22 August 2011: Here I am definitely wrong: She has an article in The Journal of Neuroscience called CNS wound healing is severely depressed in metallothionein I- and II-deficient mice!

- Penkowa has claimed that she was under contractual obligations with a company. But can a researcher sign such a contract without the university approving it? Has the university approved such a contract? Has the university investigated whether such a contract exists?

- One commentator noted that Helge Sander exited as Minister of Science one month prior to Penkowa’s suspension from the university. Is that a coincidence?

- At the beginning of January, the Chairman of the Board of the University of Copenhagen found that “Penkowa’s research is already being treated by the relevant authority, The Danish Committees on Scientific Dishonesty (DCSD)”. I presume he will no longer stand by his own statement, now that the university has involved the police in the investigation?

- The university has reported Penkowa for falsifying documents. Even if the allegation is correct, the police probably cannot do anything about it because too many years have passed: the alleged falsification supposedly took place back in 2003. A similar case, where a document falsification allegation had passed the statute of limitations, recently happened for a Danish businessman with a high-profile politician wife. In that case the Danish police simply rejected the case.

- Fraud? What fraud? The university has reported Penkowa to the police for fraud (real fraud, not science fraud). But to commit fraud you need to gain value from it. If the allegation is correct, what she gained is not clear. One “gain” was to avoid being dragged through a scientific dishonesty process, but is that a “gain” in the sense of that paragraph of the law? Has the university lost any money on that? It is probably not the case that she gained her degree based on the documents: she simply left the problematic study out of the dissertation. So fraud? What fraud?

- Her collaborator from Barcelona has said little. Penkowa has two papers in Glia from 2000: Impaired inflammatory response and increased oxidative stress and neurodegeneration after brain injury in interleukin-6-deficient mice and Metallothionein I+II expression and their role in experimental autoimmune encephalomyelitis (as far as I can see, the contested table is Table 1 with 784 rats. The method section reads “Female Lewis rats, weighing 180-200 g, were obtained from the animal facilities of the Panum Institute in Copenhagen”). I find his name on both papers. Does he have anything to say? If Copenhagen researchers find it strange that over 700 rats have been used in a study, why does this Spanish collaborator and co-author not find that strange? Now, hold on! Hold on! On 8 February 2011 he actually came forward: According to Danish news, the Spanish collaborator has asked the editor of “Glia” to retract the article. So that clarifies that aspect. Then on 9 February the Danish newspaper BT got a strong statement from the Spanish researcher saying that he does not doubt for a second that she has lied about the rats, and he used words such as “not a friendly person”, evil and demanding.

- If Penkowa writes “Female Lewis rats, weighing 180-200 g, were obtained from the animal facilities of the Panum Institute in Copenhagen” in her paper then why was the university satisfied with Penkowa’s explanation that part of the rat study was performed in Spain?

- IMK Almene Fond has supported Penkowa with 5.6 million kroner (around 1 million American dollars). They have demanded (some of) the money paid back. According to Politiken, the foundation accepted paying salaries (that had been paid), but not travel expenses and restaurant bills, bills to lawyers and expenses for patent applications, as well as office furniture, clothes, (office?) rental, hardware and software etc. Now, a foundation can put any kind of restriction on the use of its donated money. But it seems strange that a foundation giving such a large grant does not support travel expenses and restaurant bills in connection with research. In standard research grants you usually get money for exactly that: money to travel to scientific conferences, money to pay for hotel and food while you are abroad at the conferences, money to pay for food in your home country if you have lunch or dinner with foreign scientific visitors or internal scientific meetings within the group. Has the university paid money back to the foundation just to be on good terms with them in the prospect of future grants? That might be a good strategy, but is that legal? The question may be answered, as our national financial auditor Henrik Otbo will now examine this aspect.

- Milena Penkowa received the EliteForsk prize. It is unclear who promoted her. Ralf Hemmingsen ok’ed it even though he must have known about the suspicions against Penkowa. Minister of Science Helge Sander has personal ties to Penkowa. Has there been direct or indirect pressure from Sander on the people in the nomination committee? Who can investigate a former minister? Surely not the university.

- Penkowa stated in a letter that she had been to a funeral following a traffic accident involving her mother and sister. At a later party at the university her mother showed up. Did anyone at the university remember the letter? Did they write it off as a white lie composed by a stressed person?

- Ralf Hemmingsen has apologized for the treatment the three members of Penkowa’s original 2001-2003 doctoral committee got. However, it is still an open question whether the committee members did a reasonable scientific job. Prominent Nordic neuroscientists Per Andersen and Anders Björklund criticized the work of the committee. So where does that leave us? Was the work of the committee not good enough? Did Andersen and Björklund not get enough material or time for the evaluation? Is Andersen and Björklund’s criticism unfounded? Should we have an investigation of the investigation of the investigation?

- Committee members said in 2011 that they had investigated the possibility of reporting Penkowa to the Danish Committees on Scientific Dishonesty back in the early 2000s, but were advised not to do so, as it could be regarded as a breach of confidentiality. Apparently the members’ reluctance to call in DCSD put Ralf Hemmingsen in a catch-22 and was the reason he called in the investigation with Andersen and Björklund. Is the claim of the committee members really true? Shouldn’t such members be allowed to submit a case to DCSD?

- Some have criticized Ralf Hemmingsen for not involving Andersen and Björklund in the investigation of the 784 rats. But is that criticism fair? The investigation would involve looking through bureaucratic documents (bills, invoices, lab reports) and really not scientific material. Do the critics think that busy, widely known neuroscientists such as Andersen and Björklund should spend their valuable time looking into such things?

- Penkowa’s latest statement from 12 February says the following: “That company has of course existed, just as the persons who at that time were involved and performed the experiment also existed. The university called the company to get it confirmed. The one Weekendavisen called in 2010 is in all likelihood not the same person”. So either the newspaper Weekendavisen and the Spanish lawyer the University of Copenhagen employed for investigating the whereabouts of the existing or nonexisting Spanish company have made a major blunder, or Penkowa has now shown a considerably strained relationship with reality.

Care for a bit more science gossip? Here is some, translated from Danish, provided by a commentator:

I am now old enough to remember a case from around 40 years ago, where a bright and lovely lady was “carried forward” to a medical doctorate by older “benefactors” at the University of Copenhagen. Afterwards some young researchers picked the doctorate apart, causing quite a bit of embarrassment. The young doctors were, by the way, blacklisted afterwards as revenge, as far as I know. One could do that back then.

Willy Johannsen,

http://www.b.dk/berlingske-mener/snyd-og-ansvar?page=2

Photo: A Mazda MX-5 roadster. A photo by Mauricio Marchant from Wikimedia Commons with license CC-by-sa. Penkowa has a similar car and has been photographed in it a couple of times.

(Typo fix: 14. February 2011)

(Factual correction: 22. August 2011: I was wrong to state that she does not have an article in a high-impact journal. The article CNS wound healing is severely depressed in metallothionein I- and II-deficient mice from 1999 is published in The Journal of Neuroscience, which is what I would call a high-impact journal.)

On the number of blog posts and PET/MRI scanners

How many blog posts or status updates can you write?

The limited space in this slot means that there is a finite number of different status updates. After we reach this limit, we will only be able to repeat or copy. — Daniela Balslev

Facebook status messages can apparently only be 420 characters long. If you disregard capital letters, “foreign” characters and punctuation, you get something like 27^420 different Facebook status messages (26 letters plus the space at each of the 420 positions). This was discovered after some discussion on Facebook between people in Daniela Balslev’s network. It is a bit difficult to compute with such a large number, as it doesn’t fit in the standard IEEE floating point representation that is ubiquitous in computing (the largest double is around 1.8 × 10^308). However, the programming language Python is, with its “long int” representation, able to find the number:

>>> str(len('qwertyuiopasdfghjklzxcvbnm ')**420)[:5]
'14886'
>>> len(str(len('qwertyuiopasdfghjklzxcvbnm ')**420)) - 1
601

That is 1.4886 x 10^601. (This number does not reflect that consecutive spaces don’t really change the message)

So you are able to write a number of Facebook statuses with over 600 digits, if they are supposed to be different. I guess it is not so interesting to repeat a message. It will take many, many years before we repeat ourselves, even if we type really fast: well over a 500-digit number of years.
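The claim about the number of years can be checked with the same long-integer arithmetic. A minimal sketch, assuming a hypothetical and absurdly fast rate of one billion distinct status updates per second and a 365-day year.

```python
ALPHABET = "qwertyuiopasdfghjklzxcvbnm "  # 26 letters plus space
MAX_LENGTH = 420                         # characters per status update

messages = len(ALPHABET) ** MAX_LENGTH   # exact integer, too big for a float
rate_per_second = 10 ** 9                # hypothetical typing speed
seconds_per_year = 365 * 24 * 3600

# Integer division keeps everything exact; float(messages) would overflow
years = messages // (rate_per_second * seconds_per_year)
print(len(str(messages)), "digits in the number of messages")
print(len(str(years)), "digits in the number of years")
```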

I recently ran into an announcement stating that the university hospital in Tübingen has got a combined MRI/PET scanner. Wow, I thought. This is really news. I had only heard of combined CT/PET scanners and wasn’t aware that they could combine MRI and PET. Such news deserves a blog post, or at least a tweet.

As I read the announcement, it occurred to me that I had already done a blog post on combined MRI/PET scanners some years ago. That was on the late ‘Machine Culture’ blog (practically the only memory the Internet has of that post is a reference on a page in the Internet Archive: “Simultaneous PET/MR brain scanner”). So it seems that I am beginning to repeat myself at intervals of around 3.5 years. That interval does not quite match the number calculated above.

I note the MRI/PET scanner they got in Tübingen is a “Ganzkörper” type. Maybe I should focus on “Ganzkörper” this time instead of repeating myself. Maybe I should also pop in and have my head examined: A Ganzkopf-MR-PET scan.

Navigating the Natalie Portman graph: Finding a co-author path to a NeuroImage author

I first noticed Hollywood actress Natalie Portman in Mike Nichols’ 2004 film Closer. According to rumor on the Internet, a few years before Closer she co-authored a functional neuroimaging scientific article called Frontal lobe activation during object permanence: data from near-infrared spectroscopy. She was credited as Natalie Hershlag.

I have written before of data mining a co-author graph for the Erdös number and “Hayashi” number, and I wondered if it would be possible to find a co-author path from Portman to me. And indeed yes.

Abigail A. Baird first-authored Portman’s article, and the article Functional magnetic resonance imaging of facial affect recognition in children and adolescents has Abigail Baird and psychiatry professor Bruce M. Cohen among the authors. Bruce M. Cohen and Nicholas Lange are among the co-authors of Structural brain magnetic resonance imaging of limbic and thalamic volumes in pediatric bipolar disorder, and Lange and I are linked through our Plurality and resemblance in fMRI data analysis, an article that contrasted different fMRI analysis methods.

So the co-author path between Portman and me is Portman – Baird – Cohen – Lange – me, which brings my “Portman number” to 4.

Navigating a graph is a general problem if you only know the local connections. Scientific articles have even been written about it, e.g., Jon Kleinberg’s Navigating in a small world. When a human (such as I) navigates a social graph such as the co-author graph of scientific articles, one can utilize auxiliary information, here the information about where a researcher has worked, what his/her interests are and how prominent the researcher is (how many co-authors s/he has). As Portman worked at Harvard, a good guess would be to start looking among my co-authors who are near Harvard. Nicholas Lange is from Harvard, and we collaborated in the American-funded Human Brain Project. I knew that radiology professor Bruce R. Rosen was/is a central figure in Boston MRI research, so I thought that there might be a productive connection from him, both to Lange and to Portman. Portman’s co-author Baird is a professor and has written some neuroimaging papers, so among Portman’s co-authors Baird was probably the one that could lead to a path. While searching among Lange’s and Baird’s co-authors, I confused Bruce Rosen and Bruce Cohen (their Hamming distance is not great). This error proved fertile.

If I hadn’t run into Cohen and really wanted to find a path between Portman and me, I think a more automated, brute-force method would have been required. One way would be to query PubMed and put the co-author graph into NetworkX, a Python package that has a shortest path algorithm. Joe Celko shows a shortest path algorithm in SQL in his book SQL for Smarties: Advanced SQL programming. That might be an alternative to NetworkX.
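The brute-force approach boils down to a breadth-first search, which finds a shortest path in an unweighted graph. A minimal sketch, assuming the co-author graph is a dict of neighbor sets; the toy graph contains only the four co-authorships described above, whereas a real run would use a graph built from PubMed author lists.

```python
from collections import deque


def shortest_path(graph, source, target):
    """Breadth-first search; returns one shortest path as a list of nodes, or None."""
    previous = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk back through the predecessors to rebuild the path
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for neighbor in graph.get(node, ()):
            if neighbor not in previous:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


edges = [("Portman", "Baird"), ("Baird", "Cohen"), ("Cohen", "Lange"), ("Lange", "me")]
graph = {}
for a, b in edges:
    graph.setdefault(a, set()).add(b)
    graph.setdefault(b, set()).add(a)

path = shortest_path(graph, "Portman", "me")
print(" - ".join(path), "=> Portman number", len(path) - 1)
```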

(Photo: gdcgraphics, CC-by, taken from Wikimedia Commons)

Secure multi-party computations in Python?

I am not into cryptography, but I recently heard through Professor Lars Kai Hansen of secure multi-party computations, where multiple persons compute on numbers they do not directly reveal to each other, only in encrypted form.

It turns out that Aarhus has done some research in that area and even released a Python package called VIFF (Virtual Ideal Functionality Framework).

The December 14th, 2009 1.0 release can be downloaded from their homepage. They provide a standard Python setup file:

python setup.py install --home=~/python/

The installation complained because it requires the gmpy package, which is available in standard Ubuntu:

sudo aptitude install python-gmpy

The package comes with example files in the ‘apps’ directory. They require the generation of configuration files where you specify hosts and ports for the ‘persons’ that need to communicate for the secure computation. To keep it simple, I stayed on localhost:

./generate-config-files.py localhost:5000 localhost:5001 localhost:5002

In three different terminals you can then type (with the working directory being viff-1.0/apps):

./sum.py player-1.ini 42

./sum.py player-2.ini 3

./sum.py player-3.ini 5

This example program will sum 42, 3 and 5. Each of the running Python programs then reports the result:

Sum: {50}

The three values are private to each person (here each terminal), and the result is public. If you go into the middle of the Python program and write print str(x), thinking that you can reveal one of the private values (42, 3 or 5), you only get something like:

Share at 0x9751b4c current result: {805}

Close to pure magic.
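The magic rests on secret sharing. A minimal sketch of additive secret sharing modulo a prime, without any networking: each player splits its private number into random shares that add up to it modulo p and hands one share to each player; each player publishes only the sum of the shares it received, and adding those partial sums reveals the total and nothing else. This is a simpler scheme than the Shamir secret sharing VIFF builds on, as far as I know, and the prime and function names are my own choices for illustration.

```python
import secrets

P = 2 ** 61 - 1  # a Mersenne prime; all arithmetic is modulo P


def share(secret, n_players):
    """Split a secret into n random shares that add up to it modulo P."""
    shares = [secrets.randbelow(P) for _ in range(n_players - 1)]
    shares.append((secret - sum(shares)) % P)
    return shares


def secure_sum(private_values):
    n = len(private_values)
    # Player i sends share j of its value to player j
    dealt = [share(v, n) for v in private_values]
    # Each player adds up the shares it received; a single share looks random
    partial_sums = [sum(dealt[i][j] for i in range(n)) % P for j in range(n)]
    # Publishing and adding the partial sums reveals only the total
    return sum(partial_sums) % P


print(secure_sum([42, 3, 5]))
```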
